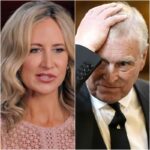

Bargain Hunt’s Christina Trevanion broke down in tears as she made the shocking revelation that she had been targeted by deepfake porn.

The TV personality, 43, described the ordeal in an appearance on BBC’s Morning Live, which also drew attention to other victims of the worrying trend.

Deepfakes use AI to change images of individuals and create new clips, often of a pornographic nature, which is what happened to Christina.

She told the show: ‘I’m used to living life in the public eye. Often the reaction from the public has been kind and sweet and supportive but over the last couple of years there’s been a noticeable shift and at times it can be quite intrusive.

‘Last September I discovered my image had been used to create phony explicit videos known as deepfake porn. I was sent a very long list of sensitive URLs where my head had been AI-ed onto pornographic videos and images.’

The Flog It! favourite detailed how the clips made her feel ‘stupid’ when she was sent them.

‘As it sunk in, it was deeply distressing,’ she said. ‘I felt naive, and stupid and utterly violated in every single way.’

The segment also highlighted the harrowing cases of other individuals who had fallen victim to deepfake porn.

One, who was given the pseudonym Jodie, was sent links to deepfake videos and images depicting her in sex scenes with multiple men in 2021.

She told the show: ‘I just felt like my whole world shattered around me, I felt that if someone saw these images, they looked very real and they might think they are real.

‘I felt my relationship might be on the line. Friends and family too, it really did feel that this could ruin my life. When I did find out who was behind them I was completely in shock, I was feeling suicidal.

‘This is a matter of male violence against women and we need to make sure victims have the option of having their images removed, ultimately that’s what many victims want.’

Meanwhile Christine, also a pseudonym, drew attention to the fact that deepfakes could still be shared legally, even though circulating intimate images was against the law.

She said: ‘The law commission report from 2022, they were less sure if the creation of these images were serious enough to criminalise because they thought if someone doesn’t know about it, there’s no harm caused.

‘This is something I found to be totally wrong.

‘The bottom line should be if a woman does not consent, that should be enough. (Her bill) covers the non-consensual taking, creation and solicitation to create sexually explicit images and video.

‘I’m hoping it sets a marker down that it is an act of abuse. If they keep the legislation as the lords passed it, (punishment) would be fine and prison as an option.’

Christine revealed the good news that she had managed to get most of the malicious content of her removed over the last few months, but admitted that the nightmare experience still stuck with her.

‘It’s something I will always have hanging over me and other victims,’ she added. ‘Seeing your own image being used without your consent feels like you’re being robbed of your free will.’
